As database administrators, we’ve spent years optimizing query response times, tuning buffer caches, and obsessing over execution plans. Now, with large language models becoming integral to enterprise applications, there’s a new performance metric demanding our attention: Time To First Token (TTFT).

Let me break down what this metric means, why it matters, and how you can apply your existing performance tuning expertise to optimize LLM inference in your organization.

What Exactly Is Time To First Token?

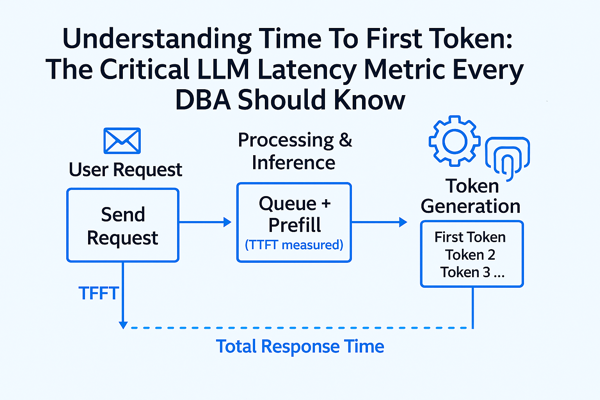

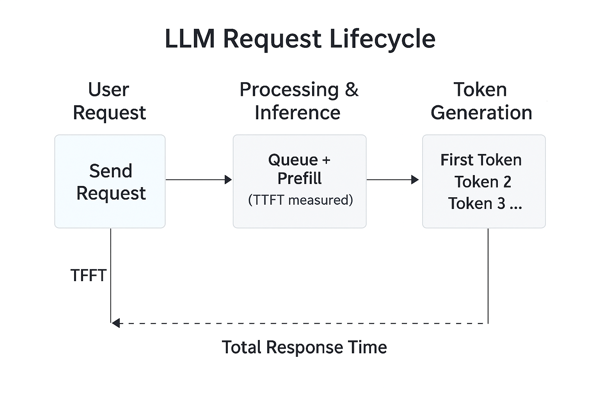

Time To First Token measures the elapsed time between when a client sends a request to an LLM API and when the first token of the response begins streaming back. Think of it as the “first byte” metric we’ve tracked for decades in web performance, but adapted for generative AI workloads.

The timeline breaks down into these key phases:

- Network latency – Round-trip time between client and inference server

- Queue wait time – Time spent waiting for available compute resources

- Tokenization – Converting the input prompt into tokens the model understands

- Prefill/prompt processing – The model processing all input tokens before generating output

- First token generation – Producing that initial response token
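To make the definition concrete, here is a minimal sketch of measuring TTFT from the client side. It assumes a hypothetical `start_stream` callable that sends the request and returns an iterable of tokens. The `fake_stream` generator is only a stand-in for a real streaming client, and its sleep lumps network, queue, tokenization, and prefill time into one delay.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_ttft(
    start_stream: Callable[[], Iterable[str]],
) -> Tuple[float, str, Iterator[str]]:
    """Return (ttft_seconds, first_token, remaining_stream).

    start_stream is a hypothetical callable that sends the request and
    returns an iterable yielding tokens as they arrive.
    """
    sent_at = time.perf_counter()      # client-side "request sent" timestamp
    stream = iter(start_stream())      # the request goes out here
    first_token = next(stream)         # blocks until the first token arrives
    ttft = time.perf_counter() - sent_at
    return ttft, first_token, stream


def fake_stream(delay_seconds: float = 0.05) -> Iterator[str]:
    """Stand-in for a real streaming client (an assumption for this sketch)."""
    time.sleep(delay_seconds)          # simulates network + queue + prefill
    for token in ["Hello", ",", " world"]:
        yield token


if __name__ == "__main__":
    ttft, first, rest = measure_ttft(fake_stream)
    print(f"TTFT: {ttft * 1000:.1f} ms, first token: {first!r}")
    print("remaining tokens:", list(rest))
```

Because the timer starts on the client, the number includes every phase listed above. Separating those phases requires server-side timestamps as well.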

Why TTFT Matters for User Experience

From a user perspective, TTFT determines perceived responsiveness. When someone submits a prompt and stares at a blank screen, every millisecond feels like an eternity. This parallels what we’ve always known about database applications: users tolerate slower total completion times far better than slow initial response times.

Consider these real-world scenarios:

| Use Case | Acceptable TTFT | Impact of High TTFT |
|---|---|---|
| Interactive chatbot | < 500 ms | Users abandon conversation |
| Code completion IDE | < 200 ms | Disrupts developer flow |
| Document summarization | < 2 seconds | Acceptable for batch feel |
| Real-time translation | < 300 ms | Conversation becomes awkward |

The psychological principle here mirrors database tuning: streaming results provide feedback that work is happening. A query returning rows progressively feels faster than one that buffers everything before displaying results, even when total time is identical.

The Technical Components Affecting TTFT

Let me walk you through the factors that influence this metric, organized by where you can make the biggest impact.

1. Model Architecture and Size

Larger models with more parameters require more computation during the prefill phase. The relationship isn’t always linear, but generally:

- 7B parameter models – Lower TTFT, suitable for latency-sensitive applications

- 70B+ parameter models – Higher TTFT, better quality but slower initial response

- Mixture of Experts (MoE) – Can reduce TTFT by activating only a subset of the model's parameters for each token

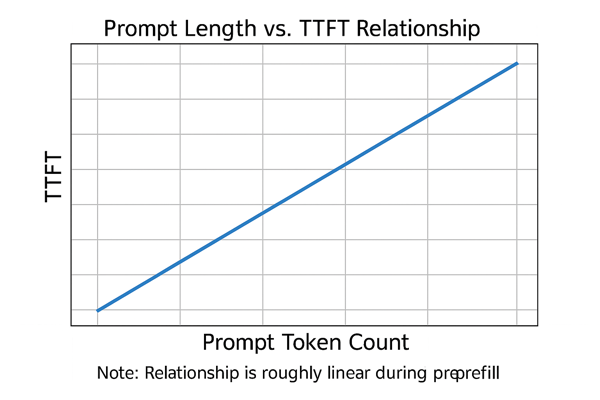

2. Input Prompt Length

This is where your DBA instincts should kick in. Just as longer SQL statements with complex WHERE clauses take more time to parse and optimize, longer prompts require more prefill computation, and that work grows at least linearly with the number of input tokens.
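For a rough feel of how model size and prompt length combine, here is a back-of-the-envelope sketch. It uses the common approximation that a forward pass costs about 2 FLOPs per parameter per token and ignores the attention term that grows with sequence length. The peak FLOPs figure and the 40% utilization are illustrative assumptions, not measurements of any particular GPU.

```python
def estimate_prefill_seconds(
    params: float,
    prompt_tokens: int,
    peak_flops: float,
    utilization: float = 0.4,
) -> float:
    """Rough prefill time estimate.

    Assumes ~2 FLOPs per parameter per input token and that the hardware
    sustains `utilization` of its peak FLOPs. Both are simplifications.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 2.0 * params * prompt_tokens
    return total_flops / (peak_flops * utilization)


if __name__ == "__main__":
    assumed_peak = 300e12  # hypothetical accelerator: 300 TFLOPs peak
    for name, params in [("7B", 7e9), ("70B", 70e9)]:
        for tokens in (500, 2000, 8000):
            secs = estimate_prefill_seconds(params, tokens, assumed_peak)
            print(f"{name} model, {tokens:>5} prompt tokens: ~{secs * 1000:.0f} ms")
```

Treat the output as an order-of-magnitude guide only. Real TTFT also includes queueing, network time, and framework overhead, so measure before drawing conclusions.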

3. Infrastructure and Hardware

The compute environment plays a significant role:

- GPU memory bandwidth – Determines how quickly model weights and cached data can be read from GPU memory

- GPU compute capacity – Affects parallel processing during prefill

- Batch size – More concurrent requests can increase queue time

- KV cache availability – Cached key-value pairs reduce redundant computation

4. Serving Framework Optimization

Modern inference servers implement various techniques to reduce TTFT:

- Continuous batching – Processes new requests without waiting for batch completion

- PagedAttention – Efficient memory management for the KV cache

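- Speculative decoding – Uses a smaller draft model to propose several tokens that the larger model verifies in parallel (this mainly speeds up generation after the first token rather than TTFT itself)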

- Prefix caching – Reuses computation for common prompt prefixes
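Prefix caching is the easiest of these to reason about, so here is a toy sketch of the idea. It only counts how many prompt tokens would still need prefill when the longest matching cached prefix is reused. The function names are hypothetical, the tokens are plain integers, and real serving frameworks typically cache at block granularity with eviction, which this ignores.

```python
from typing import Iterable, Sequence


def shared_prefix_len(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of leading tokens that a and b have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


def tokens_needing_prefill(
    prompt: Sequence[int],
    cached_prompts: Iterable[Sequence[int]],
) -> int:
    """Tokens left to process after reusing the longest cached prefix."""
    best = max(
        (shared_prefix_len(prompt, cached) for cached in cached_prompts),
        default=0,
    )
    return len(prompt) - best


if __name__ == "__main__":
    system_prompt = list(range(100))                  # shared 100-token system prompt
    earlier_request = system_prompt + [900, 901, 902]
    new_request = system_prompt + [500, 501]
    remaining = tokens_needing_prefill(new_request, [earlier_request])
    print(f"{remaining} of {len(new_request)} tokens still need prefill")
```

The takeaway for prompt design is to keep stable content such as system prompts and few-shot examples at the front, so that successive requests share as long a prefix as possible.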

TTFT vs. Other LLM Performance Metrics

Understanding where TTFT fits in the broader performance picture helps prioritize optimization efforts:

| Metric | What It Measures | When to Prioritize |
|---|---|---|
| TTFT | Time to first token | Interactive applications |
| TPS (Tokens Per Second) | Generation speed after first token | Long-form content generation |
| Total Latency | Complete request duration | Batch processing workloads |
| Throughput | Requests processed per time unit | High-volume API services |

For most user-facing applications, TTFT deserves primary focus because it directly impacts perceived performance.
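The per-request metrics in the table can all be derived from the same set of timestamps. The sketch below assumes you have recorded when the request was sent and when each token arrived. `LatencySummary` and `summarize_request` are names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class LatencySummary:
    ttft: float               # seconds from request send to first token
    tokens_per_second: float  # generation speed after the first token
    total_latency: float      # seconds from request send to last token


def summarize_request(sent_at: float, token_times: Sequence[float]) -> LatencySummary:
    """Derive TTFT, TPS, and total latency from arrival timestamps (seconds)."""
    if not token_times:
        raise ValueError("at least one token timestamp is required")
    ttft = token_times[0] - sent_at
    total_latency = token_times[-1] - sent_at
    decode_time = token_times[-1] - token_times[0]
    decode_tokens = len(token_times) - 1   # tokens produced after the first
    tps = decode_tokens / decode_time if decode_time > 0 else 0.0
    return LatencySummary(ttft, tps, total_latency)


if __name__ == "__main__":
    summary = summarize_request(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
    print(summary)
```

Throughput is the one metric in the table that is not a per-request number. It comes from counting completed requests over a time window across the whole service.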

Practical Optimization Strategies

Here’s where we can apply proven performance tuning principles to LLM inference:

Prompt Engineering for Performance

Reduce unnecessary context. Every token in your prompt increases prefill time. Review system prompts and few-shot examples critically:

- Remove redundant instructions

- Compress examples where possible

- Consider whether all context is truly necessary

Implement Intelligent Caching

Cache at multiple levels:

- Semantic caching – Store responses for semantically similar queries

- Prefix caching – Reuse KV cache for common prompt beginnings

- Result caching – Cache complete responses for identical requests
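Of the three levels, result caching is the simplest to sketch. The example below keys an in-memory dictionary on a hash of the model name and the exact prompt. `ResultCache` and the `generate` callable are hypothetical names, and a production cache would also need eviction, expiry, and a decision about whether reusing a response is acceptable for the use case.

```python
import hashlib
from typing import Callable, Dict


class ResultCache:
    """Exact-match response cache keyed on (model, prompt)."""

    def __init__(self, generate: Callable[[str, str], str]) -> None:
        self._generate = generate          # hypothetical call into the LLM
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # The NUL separator keeps ("ab", "c") distinct from ("a", "bc").
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]        # served without touching the model
        self.misses += 1
        response = self._generate(model, prompt)
        self._store[key] = response
        return response


if __name__ == "__main__":
    cache = ResultCache(lambda model, prompt: f"[{model}] answer to: {prompt}")
    cache.get("small-model", "What is TTFT?")
    cache.get("small-model", "What is TTFT?")
    print(f"hits={cache.hits}, misses={cache.misses}")
```

A cache hit skips queueing and prefill entirely, so TTFT for that request is effectively the lookup time. Semantic caching extends the same idea by matching on embedding similarity instead of an exact hash.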

Right-Size Your Model Selection

Match model capability to task requirements:

- Use smaller, faster models for simple classification or extraction tasks

- Reserve larger models for complex reasoning where quality justifies latency

- Consider model distillation for production workloads

Infrastructure Considerations

Optimize your deployment:

- Deploy inference servers geographically close to users

- Use dedicated GPU instances rather than shared resources for consistent performance

- Implement request queuing with priority levels for different use cases

- Monitor and set alerts on TTFT percentiles (p50, p95, p99)
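As one way to picture request queuing with priority levels, here is a minimal sketch built on Python's `heapq`. The class name and the priority values are assumptions for illustration. Lower numbers are served first, and requests at the same priority keep their arrival order.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PriorityRequestQueue:
    """Serve latency-sensitive requests before batch-style ones."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Any]] = []
        self._arrival = itertools.count()  # tie-breaker preserves FIFO order

    def put(self, request: Any, priority: int) -> None:
        """Queue a request; a lower priority number is served sooner."""
        heapq.heappush(self._heap, (priority, next(self._arrival), request))

    def get(self) -> Any:
        """Pop the highest-priority (lowest number) request."""
        _priority, _order, request = heapq.heappop(self._heap)
        return request

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = PriorityRequestQueue()
    queue.put("summarize quarterly report", priority=2)  # batch-style work
    queue.put("chat: first message", priority=0)         # interactive
    queue.put("chat: second message", priority=0)
    while queue:
        print(queue.get())
```

A strict priority scheme like this can starve low-priority work under sustained load, so a real deployment would likely add aging or per-class capacity limits.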

Monitoring TTFT in Production

Establish baseline measurements and track trends over time. Key practices include:

- Instrument your API calls to capture timestamps at request send and first token receipt

- Segment by prompt characteristics such as length, complexity, and use case

- Track percentiles rather than averages since outliers matter for user experience

- Correlate with infrastructure metrics including GPU utilization, memory pressure, and queue depth
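The percentile and segmentation practices above can be sketched with the standard library alone. This example uses the nearest-rank percentile method and groups samples into prompt-length buckets. The bucket size and the sample values are made up for illustration, and a monitoring system may use a different percentile definition.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def ttft_percentiles_by_bucket(
    samples: Iterable[Tuple[int, float]],
    bucket_size: int = 1000,
) -> Dict[int, Dict[str, float]]:
    """Group (prompt_tokens, ttft_ms) samples by prompt length, then summarize."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for prompt_tokens, ttft_ms in samples:
        bucket_start = (prompt_tokens // bucket_size) * bucket_size
        buckets[bucket_start].append(ttft_ms)
    return {
        start: {f"p{p}": percentile(values, p) for p in (50, 95, 99)}
        for start, values in sorted(buckets.items())
    }


if __name__ == "__main__":
    # Made-up samples: (prompt length in tokens, TTFT in milliseconds)
    samples = [(120, 180.0), (450, 210.0), (800, 950.0), (1500, 420.0), (1900, 480.0)]
    for start, stats in ttft_percentiles_by_bucket(samples).items():
        print(f"prompts with {start}-{start + 999} tokens: {stats}")
```

Segmenting first matters because a single global p95 can hide the fact that short prompts are fine while long prompts are not, or the reverse.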

The Bottom Line

Time To First Token represents the intersection of user experience and infrastructure performance in the LLM era. As DBAs, we bring decades of experience optimizing response times, managing resource contention, and balancing throughput against latency. These principles translate directly to LLM performance tuning.

The organizations that excel at AI deployment will be those that treat inference optimization with the same rigor we’ve applied to database performance for years. TTFT is your starting point for that conversation.

What challenges are you seeing with LLM latency in your environment? I’d welcome the opportunity to discuss approaches that might work for your specific use cases.

Bobby Curtis

I’m Bobby Curtis and I’m just your normal average guy who has been working in the technology field for a while (I started when I was 18 with the US Army). The goal of this blog has changed a bit over the years. Initially, it was a general blog where I wrote thoughts down. Then it changed to focus on the Oracle Database, Oracle Enterprise Manager, and eventually Oracle GoldenGate.

If you want to follow me in a more timely manner, you can find me on Twitter at @dbasolved or on LinkedIn under “Bobby Curtis MBA”.