Initial Load from Tandem (HP-UX) to AWS Kafka

Working with a customer where we needed to move data from a Tandem (HP-UX Guardian) system up to an AWS EC2 platform that will eventually end up on an AWS MSK Cluster (Kafka). The concept is pretty straight forward; however, I will say that putting it into practice provided to be a challenge. The biggest part of this challenge was the initial load process that should be used. Oracle GoldenGate is the best replication tool on the market, but the one thing that it lags in is the approach of initial load.

The initial load process for Oracle GoldenGate (both Oracle and Heterogenous) comes in many different options. The documentation for initial loads have changed over the years as well as the approaches. With the latest release of Oracle GoldenGate (21c), this hasn’t gotten any better. But if you want a reference point, you can review the steps in Chapter 11 of the Using Oracle GoldenGate with Oracle Database (here).

To perform this initial load from a Tandem system to Kafka, we used two different binary sets. In this case, we were using the following:

- Oracle GoldenGate 12c (12.2.0.1)(Classic)

- Oracle GoldenGate 21c (21.5.0.0)(Microservices)

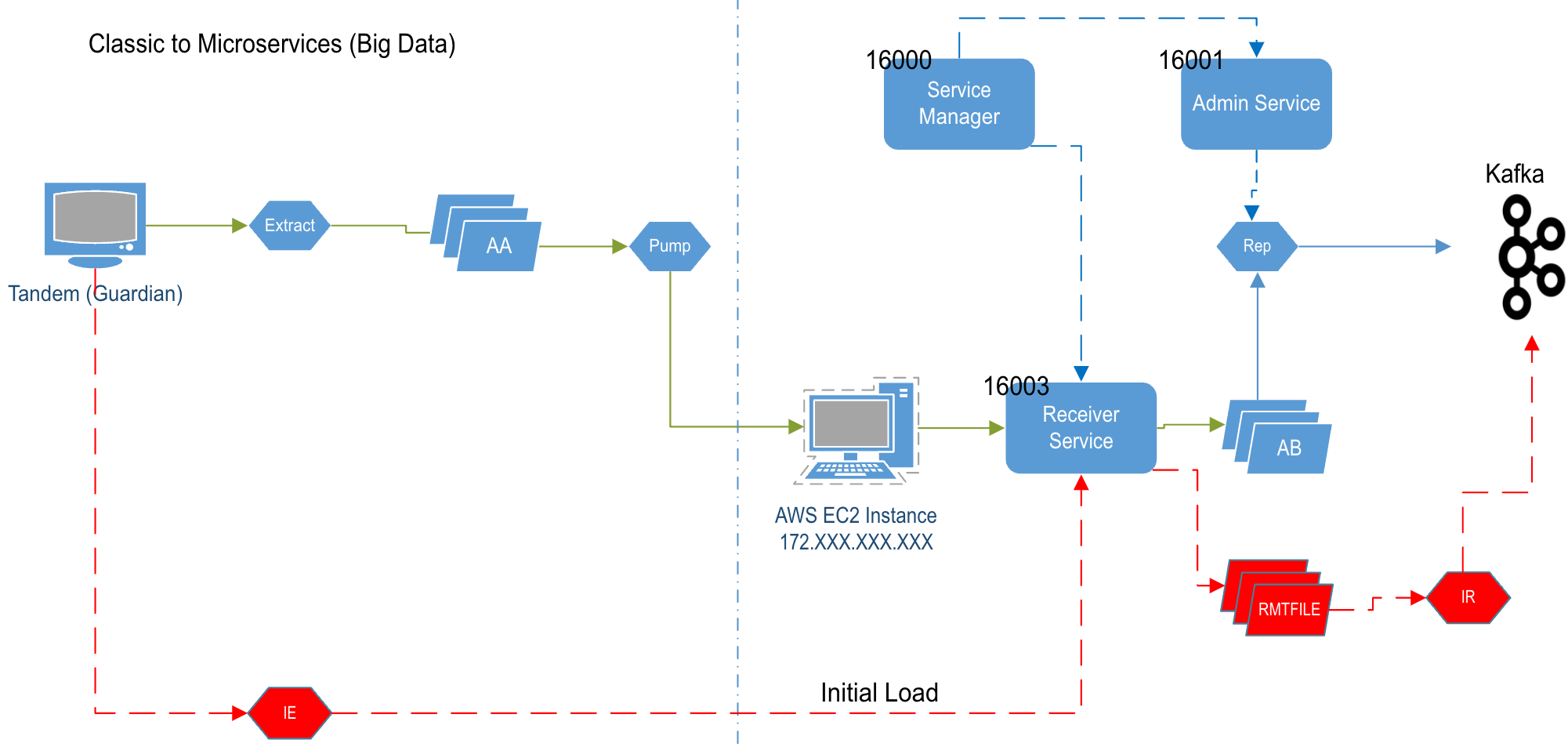

As you may have noticed, the implementation we were working with is a Classic to Microservices architecture. Oracle GoldenGate (Microservices) is the latest release of Oracle GoldenGate and the direction that Oracle is pushing for data integration strategies.

Below you see the basic concept of this architecture. The Classic side of the architecture is straight forward when it comes to Oracle GoldenGate. When the architecture transitions to the EC2 side, Oracle GoldenGate (Microservices) has a few more moving parts that should be managed. For the purpose of the initial load, the only service that is needed is the Receiver Service (port 16003); more on this shortly.

In the diagram above, we are only going to be discussing the items in red. These items represent the initial load process and how this process was accomplished between a Oracle GoldenGate (Classic) running on a Tandem (HP-UX Non-Stop) and loading data into Kafka using Oracle GoldenGate (Microservices). The “direct load” initial load process was followed for this configuration (this approach is not covered in the 21c docs…just an FYI).

Tandem (HP-UX Non-Stop):

First thing that needs to be done is configuring the Initial Load Extract on the Tandem side. The following parameter file was used:

EXTRACT eil

RMTHOST <host/IP address>, MGRPORT 16003

RMTFILE <rmtfile>

TABLE *.*.*;

This initial load extract looks pretty standard. We are telling Oracle GoldenGate (Classic) to read all the data from the tables that were in the TABLE line. Essenstally doing a “SELECT *” and pull all the data. Then move that data across the network to the remote server and begin writing to the remote file. Oracle GoldenGate (Microservices) begins writing the remote file, but then immediately presents a “broken pipe” error. This behavior caused a lot of confusion. After opening an SR and talking with Oracle resources, it was noted that the Tandem default settings for the buffer needed to be changed.

Note: By default, Tandem sets its TCP/IP buffer to 64K.

Apparently, using the default settings on the Tandem didn’t work. To work around the “broken pipe” issue, we had to set the TCPFlushBytes and TCPBufSize to less than 28K. This resulted in our extract parameter file being changed:

EXTRACT eil

TCPFlushBytes 27000

TCPBufSize 27000

RMTHOST <host/IP address>, MGRPORT 16003

RMTFILE <rmtfile>

TABLE *.*.*;

By adding the TCP parameters, the extract in Oracle GoldenGate (Classic) is able to successfully make the connection to the Oracle GoldenGate (Microservices): Receiver Service on port 16003 and write data to the remote file. By shrinking the TCPFlushBytes and the TCPBufSize below 28K, this avoids a known limitation with Oracle GoldenGate and the usage of RMTTASK and RMTFILE, since they both make the same calls.

To build the extract within Oracle GoldenGat (Classic) the following commands were used:

GGSCI> add extract eil, SOURCEISTABLE

EC2 Instance/GoldenGate Microservices

With the RMTFILE being written successfully to the AWS EC2 platform, an initial load replicat can be built to apply the bulk data to Kafka. The parameter file for the replicat is:

REPLICAT ril

TARGETDB LIBFILE libggjava.so SET property=<location for Kafka properties file>

SOURCEDEFS <location for source def file>

MAP *.*.*, TARGET *.*.*;

Now the exact setting for configuring the connection to Kafka are contained within two properties files. These properties files are used to make the connect and what format the data should be provided in. The first of these files is the Kafka.properties (connection file). This file sets ups the Kafka Handler and any specific items that are needed for the handler. The example that we used is similar to the following:

gg.handlerlist=kafkahandler

#The handler properties

gg.handler.kafkahandler.type=kafka

gg.handler.kafkahandler.kafkaProducerConfigFile=kafka.properties

gg.handler.kafkahandler.topicMappingTemplate=${toLowerCase[${tableName}]}

gg.handler.kafkahandler.keyMappingTemplate=${toLowerCase[${tableName}]}

gg.handler.kafkahandler.mode=op

gg.handler.kafkahandler.Format=json

goldengate.userexit.writers=javawriter

javawriter.stats.display=TRUE

javawriter.stats.full=TRUE

gg.log=log4j

gg.log.level=INFO

gg.report.time=30sec

gg.classpath=/app/orabd/opt/DependencyDownloader/dependencies/kafka_2.7.1/*

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \

username=“<user>" \

password=“<password>";

security.protocol=SASL_SSL

Next we defined a second properties file that defines the connection to Kafka and the associated brokers. As well as setting up the conversion of the data formats and performance tuning items. Our file looked similar to the following:

# address/port of the Kafka broker

bootstrap.servers=<kafka broker servers and ports>

#JSON Converter Settings

key.converter.schemas.enable=false

value.converter.schemas.enable=false

value.serializer = org.apache.kafka.common.serialization.ByteArraySerializer

key.serializer = org.apache.kafka.common.serialization.ByteArraySerializer

#Adjust for performance

buffer.memory=33554432

batch.size=2048

linger.ms=500

After setting the properties file that will be used by Oracle GoldenGate for Big Data to connect to Kafka, we needed to add the replicat to the architecture. This can be done either from the AdminClient or from the HMTL5 web page through the Administration Service. For command line compatibility, the following steps are done through the AdminClient:

AdminClient> add replicat ril, exttrail <RMTFILE>

AdminClient> start replicat ril

At this stage, after starting the replicat (RIL), we are reading the RMTFILE and performing a “direct load” initial load from Tandem to Kafka.

With the “direct load” working, we were pushing approximate 5.4 million records in ~45 minutes. This was a single table load. Depending on the number of tables that need to be loaded, you will need to scale this approach with either more RMTFILEs or multiple replicats.

Enjoy and happy replicating!

Current Oracle Certs

Bobby Curtis

I’m Bobby Curtis and I’m just your normal average guy who has been working in the technology field for awhile (started when I was 18 with the US Army). The goal of this blog has changed a bit over the years. Initially, it was a general blog where I wrote thoughts down. Then it changed to focus on the Oracle Database, Oracle Enterprise Manager, and eventually Oracle GoldenGate.

If you want to follow me on a more timely manner, I can be followed on twitter at @dbasolved or on LinkedIn under “Bobby Curtis MBA”.